Spark cluster from your Python environment

Install

Install the ods package using pip:

```shell
$ pip install ods
```
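To confirm that the package was installed into the same environment your Python interpreter uses, the standard library is enough. This is a minimal sketch. The helper name `ods_installed_version` is hypothetical, and it assumes the installed distribution is named `ods`, as in the pip command above. It only reads package metadata and does not import ods.

```python
from importlib import metadata
from typing import Optional


def ods_installed_version(dist_name: str = "ods") -> Optional[str]:
    """Return the installed version of `dist_name`, or None if it is missing.

    Hypothetical helper: it inspects installed package metadata only, so it
    never imports the package or contacts any service.
    """
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = ods_installed_version()
    if version is None:
        print("ods is not installed; run: pip install ods")
    else:
        print(f"ods {version} is installed")
```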

Then get an access token and set the STAROID_ACCESS_TOKEN environment variable.

```shell
$ export STAROID_ACCESS_TOKEN="<your access token>"
```

For alternative ways to configure the access token, see staroid-python.

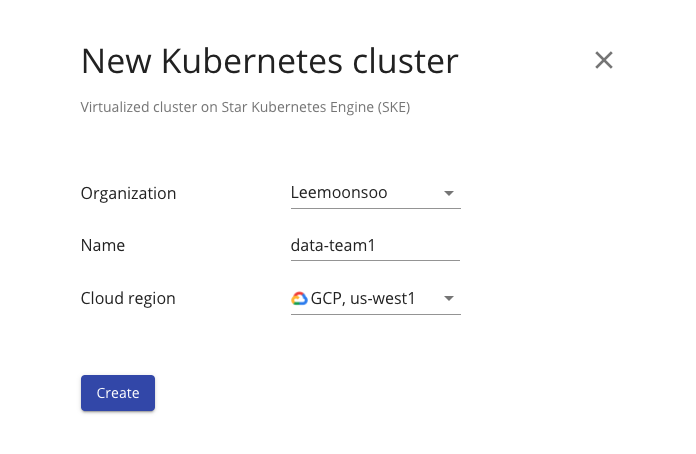
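If you rely on the environment variable, you can fail fast with a clear message when it is missing instead of hitting an authentication error later. A minimal sketch: the helper `require_env` is hypothetical, and it only covers the environment-variable route, not the alternative configuration methods offered by staroid-python.

```python
import os


def require_env(name: str) -> str:
    """Return the value of environment variable `name`.

    Hypothetical helper: raises RuntimeError with a hint if the variable is
    unset or contains only whitespace, so a missing credential is reported
    before any library call is made.
    """
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(
            f"{name} is not set; export it before starting Python, e.g. "
            f'export {name}="<your access token>"'
        )
    return value
```

Calling `require_env("STAROID_ACCESS_TOKEN")` at the top of a notebook or script surfaces the problem immediately; avoid printing the returned value, since it is a credential.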

Create Kubernetes cluster

On staroid.com, go to Products -> Kubernetes (SKE) -> New Kubernetes cluster.

Then import the Python library and configure the Kubernetes cluster name.

```python
import ods

# 'ske' is the name of the Kubernetes cluster created on staroid.com.
# Alternatively, you can set the 'STAROID_SKE' environment variable.
ods.init(ske="data-team1")
```
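Since the cluster name can come either from the `ske` argument or from the `STAROID_SKE` environment variable, a small helper can make the choice explicit in your own code. This is a sketch: `resolve_ske_name` is hypothetical, and the precedence it applies (explicit argument first, then the environment variable) is an assumption for illustration, not documented ods behavior.

```python
import os
from typing import Optional


def resolve_ske_name(ske: Optional[str] = None) -> str:
    """Pick the SKE cluster name: explicit argument first, then STAROID_SKE.

    Hypothetical helper; the precedence is an illustrative assumption.
    Raises ValueError if neither source provides a non-empty name.
    """
    name = ske or os.environ.get("STAROID_SKE")
    if not name:
        raise ValueError(
            "No Kubernetes cluster name: pass ske=... or set STAROID_SKE"
        )
    return name
```

With this helper, the initialization call can be written as `ods.init(ske=resolve_ske_name())`, so the name is always passed explicitly regardless of where it was configured.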

Create PySpark session

Spark-serverless lets you create interactive PySpark sessions whose executors run remotely in the cloud.

```python
import ods

# 'ske' is the name of the Kubernetes cluster created on staroid.com.
# Alternatively, you can set the 'STAROID_SKE' environment variable.
ods.init(ske="data-team1")

# Get a Spark session with 3 initial worker nodes and Delta Lake enabled
spark = ods.spark("my-cluster", worker_num=3, delta=True).session()

# Do your work with the Spark session
df = spark.read.load(...)
```

Now you can use the Spark session with 3 executors running remotely.

Note

No application packaging or submit step is required; everything runs interactively.